Continuing from the evaluation framework, this part moves on to a high-level implementation.

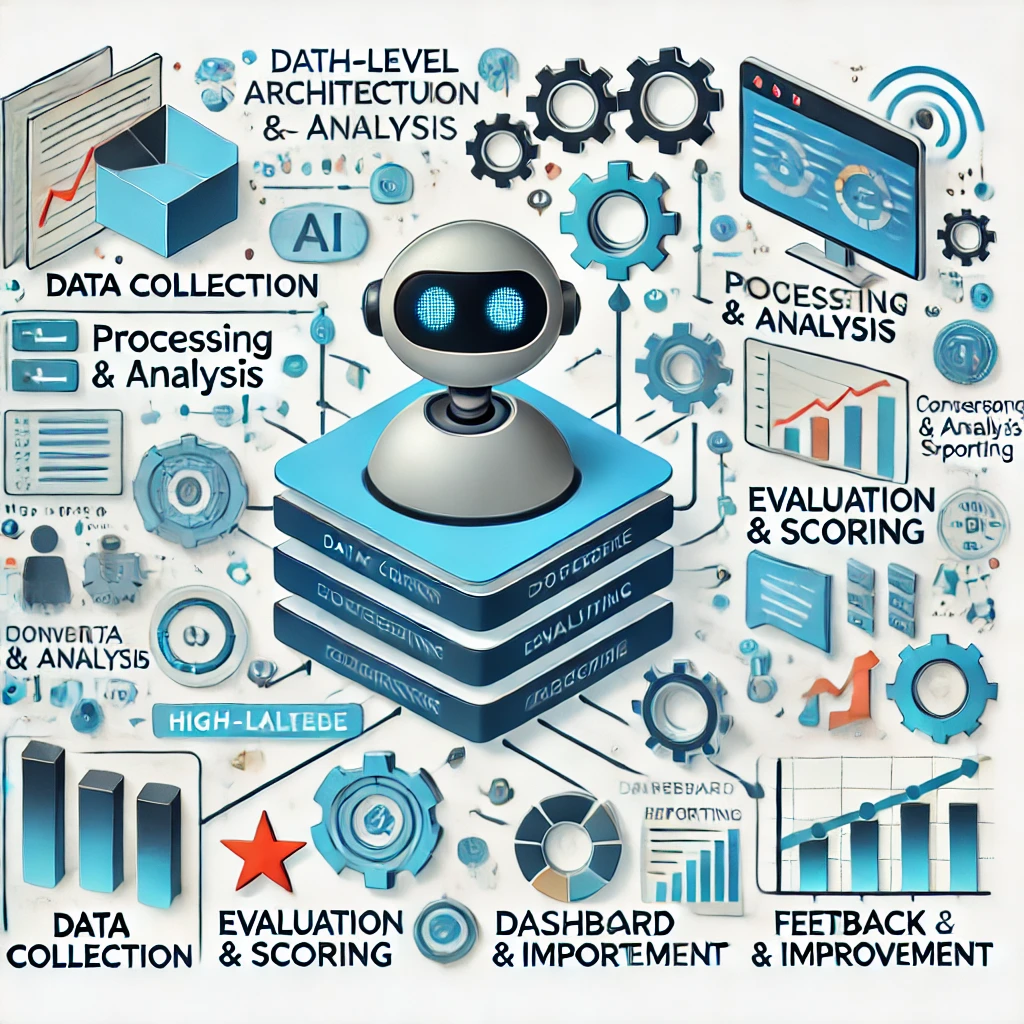

The following high-level design outlines the architecture and components needed to implement the Conversational AI Evaluation Framework, focusing on four key metrics: Dialogue Management Effectiveness (DME), Contextual Memory Coherence (CMC), Conversational Planning Ability (CPA), and Component Synergy (CS).

1. System Architecture Overview

Data Collection Layer

Components:

Interaction Logger: Captures all user and AI interactions in real time, including user inputs, AI responses, and metadata.

Performance Tracker: Logs system performance metrics such as response time, memory usage, and API call success rates.

Purpose: To gather the raw data needed to compute the four evaluation metrics.

Processing and Analysis Layer

Components:

Metric Calculators:

DME Calculator: Evaluates intent recognition, response appropriateness, and conversation flow.

CMC Calculator: Measures context retention, anaphora resolution, and memory recall efficiency.

CPA Calculator: Assesses goal alignment, adaptability, and execution success.

CS Calculator: Analyzes the efficiency of component interactions and integration.

Automated Analysis Engine: Processes raw interaction data using predefined algorithms to compute evaluation metrics.

Evaluation and Scoring Layer

Components:

Metric Scoring Module: Assigns scores to each metric based on the data processed by the calculators.

LLM-Assisted Evaluator: Uses pre-trained large language models (LLMs) to provide qualitative assessments for complex scenarios where automated metrics are insufficient.

Hybrid Score Aggregator: Combines automated and LLM-derived scores into a final evaluation score for each metric.

Dashboard and Reporting Layer

Components:

Real-time Dashboard: Visualizes evaluation metrics and trends over time, allowing stakeholders to monitor system performance.

Report Generator: Produces detailed reports summarizing key findings, strengths, and areas for improvement.

Feedback and Improvement Layer

Components:

Feedback Loop Manager: Collects user and stakeholder feedback and integrates it into the evaluation process.

Continuous Improvement Module: Uses evaluation results to suggest specific areas for model retraining, component optimization, and workflow adjustments.

2. Component Details

2.1 Data Collection Layer

Interaction Logger:

Captures every interaction between users and the conversational agent.

Logs user inputs, AI responses, timestamps, and any relevant metadata.
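A minimal Python sketch of the Interaction Logger, assuming a JSON Lines format (one JSON object per line) that a log shipper such as Fluentd or Logstash could tail. The `InteractionRecord` fields, the `InteractionLogger` class and the `parse_jsonl` helper are hypothetical names chosen for illustration, not a prescribed schema.

```python
import io
import json
import time
from dataclasses import asdict, dataclass, field
from typing import Any, Dict, List, TextIO


@dataclass
class InteractionRecord:
    """One user/AI exchange. Field names are illustrative, not a fixed schema."""
    conversation_id: str
    turn: int
    user_input: str
    ai_response: str
    timestamp: float = field(default_factory=time.time)
    metadata: Dict[str, Any] = field(default_factory=dict)


class InteractionLogger:
    """Writes each interaction as one JSON line to a text sink, e.g. an open
    file that a log shipper could tail."""

    def __init__(self, sink: TextIO) -> None:
        self.sink = sink

    def log(self, record: InteractionRecord) -> None:
        self.sink.write(json.dumps(asdict(record)) + "\n")


def parse_jsonl(text: str) -> List[InteractionRecord]:
    """Rebuild records from JSONL text, skipping blank lines."""
    return [InteractionRecord(**json.loads(line))
            for line in text.splitlines() if line.strip()]


if __name__ == "__main__":
    buffer = io.StringIO()  # stands in for a real log file
    logger = InteractionLogger(buffer)
    logger.log(InteractionRecord("c1", 1, "Book a table", "For how many people?",
                                 metadata={"channel": "web"}))
    print(parse_jsonl(buffer.getvalue())[0].metadata)  # {'channel': 'web'}
```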

Performance Tracker:

Monitors real-time system performance, including response latency and API utilization.

Tracks memory usage, server load, and other operational metrics.
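The latency and success-rate bookkeeping of the Performance Tracker can be sketched with a context manager. `PerformanceTracker` and the operation names are illustrative assumptions; memory usage and server load would come from other sources (for example the standard-library `tracemalloc` module or host-level monitoring) and are left out here.

```python
import statistics
import time
from contextlib import contextmanager
from typing import Dict, Iterator, List


class PerformanceTracker:
    """Records latency and success/failure for named operations (e.g. "llm_api")."""

    def __init__(self) -> None:
        self.latencies: Dict[str, List[float]] = {}
        self.outcomes: Dict[str, List[bool]] = {}

    @contextmanager
    def track(self, operation: str) -> Iterator[None]:
        start = time.perf_counter()
        ok = True
        try:
            yield
        except Exception:
            ok = False
            raise  # record the failure, but let the caller handle it
        finally:
            elapsed = time.perf_counter() - start
            self.latencies.setdefault(operation, []).append(elapsed)
            self.outcomes.setdefault(operation, []).append(ok)

    def summary(self, operation: str) -> Dict[str, float]:
        """Call count, mean/max latency in seconds, and success rate."""
        lat = self.latencies[operation]
        outcomes = self.outcomes[operation]
        return {
            "calls": float(len(lat)),
            "mean_latency_s": statistics.fmean(lat),
            "max_latency_s": max(lat),
            "success_rate": sum(outcomes) / len(outcomes),
        }
```

Usage: wrap each outbound call, e.g. `with tracker.track("llm_api"): ...`, and read `tracker.summary("llm_api")` when computing metrics.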

2.2 Processing and Analysis Layer

DME Calculator:

Uses Natural Language Processing (NLP) techniques to evaluate intent recognition and response quality.

Compares the recognized user intents against labeled benchmark intents to measure accuracy.
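As a sketch of the intent-recognition part of the DME Calculator, assume each logged turn carries a predicted intent and a benchmark (gold) label. Accuracy, per-intent recall and the most frequent confusions then follow directly. The function names are hypothetical; response appropriateness and conversation flow would need separate measures.

```python
from collections import Counter
from typing import Dict, List, Sequence, Tuple


def intent_accuracy(predicted: Sequence[str], gold: Sequence[str]) -> float:
    """Fraction of turns whose predicted intent matches the benchmark label."""
    if len(predicted) != len(gold):
        raise ValueError("predicted and gold must be the same length")
    if not gold:
        raise ValueError("need at least one labelled turn")
    return sum(p == g for p, g in zip(predicted, gold)) / len(gold)


def per_intent_recall(predicted: Sequence[str], gold: Sequence[str]) -> Dict[str, float]:
    """Recall for each gold intent, useful for spotting intents the agent misses."""
    totals = Counter(gold)
    hits = Counter(g for p, g in zip(predicted, gold) if p == g)
    return {intent: hits[intent] / n for intent, n in totals.items()}


def top_confusions(predicted: Sequence[str], gold: Sequence[str],
                   k: int = 3) -> List[Tuple[Tuple[str, str], int]]:
    """Most common (gold, predicted) mistakes with their counts."""
    return Counter((g, p) for p, g in zip(predicted, gold) if p != g).most_common(k)
```

For example, with gold labels `["book", "book", "cancel", "faq"]` and predictions `["book", "cancel", "cancel", "faq"]`, accuracy is 0.75, recall for `book` is 0.5, and the top confusion is `("book", "cancel")`.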

CMC Calculator:

Employs context tracking algorithms to evaluate the AI’s ability to retain and utilize conversation history.

Uses latency measures to assess memory retrieval speed.
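A rough sketch of two CMC measures, assuming the test set contains "recall probes": later turns whose correct answer depends on a fact stated earlier in the conversation. Case-insensitive substring matching is a deliberately crude stand-in for the NLP-based matching a real calculator would use, and `RecallProbe` is a hypothetical structure.

```python
import statistics
from dataclasses import dataclass
from typing import Sequence


@dataclass
class RecallProbe:
    """A later turn that should reuse a fact stated earlier in the conversation."""
    expected_fact: str   # e.g. "vegetarian", stated by the user in an earlier turn
    ai_response: str     # the agent's answer at the probe turn


def context_retention(probes: Sequence[RecallProbe]) -> float:
    """Share of probes whose response mentions the expected fact.
    Substring matching is a crude proxy for semantic matching."""
    if not probes:
        raise ValueError("no probes")
    hits = sum(p.expected_fact.lower() in p.ai_response.lower() for p in probes)
    return hits / len(probes)


def retrieval_latency_p95(latencies_ms: Sequence[float]) -> float:
    """95th-percentile memory-retrieval latency in milliseconds.
    Needs at least two samples; "inclusive" keeps results within the observed range."""
    # quantiles(n=100) returns the 1st..99th percentiles, so index 94 is the 95th
    return statistics.quantiles(latencies_ms, n=100, method="inclusive")[94]
```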

CPA Calculator:

Breaks user goals down into sub-tasks and checks the AI’s alignment with them and its adaptability when goals change.

Tracks error rates in executing planned actions.
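The CPA checks might look like the following, assuming each user goal has already been decomposed into a list of required sub-task names and each agent action is logged with the sub-task it served and whether it succeeded. These definitions are one reasonable reading of "alignment" and "error rate", not formulas taken from the framework; adaptability (replanning after a goal changes) is not covered.

```python
from dataclasses import dataclass
from typing import Dict, Sequence


@dataclass
class ActionResult:
    subtask: str      # which sub-task this action served, e.g. "check_availability"
    succeeded: bool


def plan_metrics(required_subtasks: Sequence[str],
                 actions: Sequence[ActionResult]) -> Dict[str, float]:
    """Goal alignment: share of required sub-tasks completed successfully at least once.
    Execution error rate: failed actions / all attempted actions."""
    required = set(required_subtasks)
    completed = {a.subtask for a in actions if a.succeeded}
    failures = sum(not a.succeeded for a in actions)
    return {
        "goal_alignment": len(required & completed) / len(required) if required else 1.0,
        "execution_error_rate": failures / len(actions) if actions else 0.0,
        # actions spent on sub-tasks outside the plan may signal drift from the goal
        "off_plan_actions": float(sum(a.subtask not in required for a in actions)),
    }
```

With required sub-tasks `find_restaurant`, `check_availability`, `book_table` and the actions `find_restaurant` (ok), `check_availability` (failed), `check_availability` (ok), `send_coupon` (ok), goal alignment is 2/3, the error rate is 0.25, and one action is off-plan.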

CS Calculator:

Analyzes logs to assess how efficiently data flows between components.

Detects and logs any conflicts or inconsistencies in component interactions.
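One way the CS Calculator could analyze component logs, assuming each component emits an event with a request ID, start and end timestamps, and the slot values it produced. Idle time between consecutive components serves as a proxy for data-flow efficiency, and disagreement on a slot value counts as a conflict; `ComponentEvent` and the component names are hypothetical.

```python
from collections import defaultdict
from dataclasses import dataclass
from typing import Dict, List, Sequence, Tuple


@dataclass
class ComponentEvent:
    request_id: str
    component: str            # e.g. "nlu", "memory", "planner"
    start: float              # seconds
    end: float
    outputs: Dict[str, str]   # slot values this component produced


def handoff_gaps(events: Sequence[ComponentEvent]) -> List[float]:
    """Idle time between consecutive components within each request."""
    by_request: Dict[str, List[ComponentEvent]] = defaultdict(list)
    for e in events:
        by_request[e.request_id].append(e)
    gaps: List[float] = []
    for evs in by_request.values():
        evs.sort(key=lambda e: e.start)
        gaps.extend(max(0.0, nxt.start - prev.end) for prev, nxt in zip(evs, evs[1:]))
    return gaps


def slot_conflicts(events: Sequence[ComponentEvent]) -> List[Tuple[str, str, Dict[str, str]]]:
    """(request_id, slot, {component: value}) wherever components disagree on a slot."""
    seen: Dict[Tuple[str, str], Dict[str, str]] = defaultdict(dict)
    for e in events:
        for slot, value in e.outputs.items():
            seen[(e.request_id, slot)][e.component] = value
    return [(rid, slot, vals) for (rid, slot), vals in seen.items()
            if len(set(vals.values())) > 1]
```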

2.3 Evaluation and Scoring Layer

Metric Scoring Module:

Uses predefined formulas to compute scores for each metric.

Assigns weights to different sub-metrics based on their importance.
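A minimal sketch of the weighted scoring, with hypothetical DME sub-metric weights (the framework does not fix the values). Weights are renormalised, so a configuration that does not sum exactly to 1 still yields a score on the same 0 to 1 scale.

```python
from typing import Dict

# Hypothetical weights; the actual values are a per-use-case decision.
DME_WEIGHTS: Dict[str, float] = {
    "intent_recognition": 0.4,
    "response_appropriateness": 0.35,
    "conversation_flow": 0.25,
}


def weighted_score(sub_scores: Dict[str, float], weights: Dict[str, float]) -> float:
    """Weighted mean of sub-metric scores (each in [0, 1]).
    Weights are renormalised, so they need not sum to exactly 1."""
    missing = weights.keys() - sub_scores.keys()
    if missing:
        raise KeyError(f"missing sub-scores: {sorted(missing)}")
    total = sum(weights.values())
    if total <= 0:
        raise ValueError("weights must sum to a positive number")
    return sum(sub_scores[name] * w for name, w in weights.items()) / total
```

With sub-scores 0.9, 0.8 and 0.6 for intent recognition, appropriateness and flow, the weighted DME score is 0.79.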

LLM-Assisted Evaluator:

Uses prompts and predefined criteria to get qualitative assessments from LLMs.

Combines multiple LLM evaluations to improve reliability.
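Combining several LLM judgements could look like this sketch. A judge is assumed to be any function mapping a prompt to a 1 to 5 rating (in practice a wrapper around whichever LLM API is used, which is not shown); taking the median and flagging large spreads for human review is one simple aggregation choice among many.

```python
import statistics
from dataclasses import dataclass
from typing import Callable, Sequence

# Hypothetical judge type: prompt in, numeric rating out (e.g. an LLM API wrapper).
Judge = Callable[[str], float]


@dataclass
class EnsembleResult:
    score: float
    spread: float
    needs_review: bool


def ensemble_judgement(prompt: str, judges: Sequence[Judge],
                       max_spread: float = 1.0) -> EnsembleResult:
    """Query several judges (different models, or one model with varied sampling).
    The median damps a single outlier; a large spread flags the item for a human."""
    scores = [judge(prompt) for judge in judges]
    spread = max(scores) - min(scores)
    return EnsembleResult(statistics.median(scores), spread, spread > max_spread)
```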

Hybrid Score Aggregator:

Blends automated and LLM scores using weighted formulas.

Adjusts weights dynamically based on evaluation context and use case.
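A sketch of the Hybrid Score Aggregator with context-dependent weights. The context names and LLM weights in `LLM_WEIGHT_BY_CONTEXT` are illustrative assumptions, and LLM ratings are assumed to arrive on a 1 to 5 scale that must be mapped onto the automated scores' 0 to 1 range before blending.

```python
from typing import Dict

# Hypothetical profiles: open-ended chat leans on LLM judgement,
# transactional flows lean on automated checks. Values are illustrative only.
LLM_WEIGHT_BY_CONTEXT: Dict[str, float] = {
    "transactional": 0.2,
    "customer_support": 0.4,
    "open_ended": 0.6,
}


def normalise_likert(score: float, low: float = 1.0, high: float = 5.0) -> float:
    """Map an LLM rating on a low..high scale onto [0, 1] so it can be blended."""
    return (score - low) / (high - low)


def hybrid_score(automated: float, llm: float, context: str,
                 default_llm_weight: float = 0.3) -> float:
    """Blend an automated score and an LLM score, both already in [0, 1]."""
    w = LLM_WEIGHT_BY_CONTEXT.get(context, default_llm_weight)
    return (1 - w) * automated + w * llm
```

For example, an automated score of 0.8 and an LLM rating of 3 (0.5 after normalisation) blend to 0.62 in the hypothetical `transactional` context and to 0.62 only by coincidence of weights nowhere else; with `open_ended` the same inputs give 0.62 as well only if the weights match, so the context table is where the use case actually shows up.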

2.4 Dashboard and Reporting Layer

Real-time Dashboard:

Displays scores, trends, and anomalies in real time.

Provides filters and drill-down capabilities to analyze specific aspects of performance.
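Trends and anomalies are usually visualized in a dashboard tool, but the anomaly flag itself can be computed upstream and pushed alongside the scores. This sketch uses a rolling z-score over the most recent `window` values, a simple technique chosen for illustration; the window size and threshold are assumptions to tune.

```python
import statistics
from collections import deque
from typing import Deque, Optional


class RollingAnomalyDetector:
    """Flags a new metric value whose z-score against the last `window`
    values exceeds `threshold`."""

    def __init__(self, window: int = 20, threshold: float = 3.0) -> None:
        self.values: Deque[float] = deque(maxlen=window)
        self.threshold = threshold

    def update(self, value: float) -> Optional[float]:
        """Return the z-score if `value` is anomalous, else None; then record it."""
        z = None
        if len(self.values) >= 2:  # stdev needs at least two points
            mean = statistics.fmean(self.values)
            stdev = statistics.stdev(self.values)
            if stdev > 0 and abs(value - mean) / stdev > self.threshold:
                z = (value - mean) / stdev
        self.values.append(value)
        return z
```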

Report Generator:

Summarizes findings in a structured format.

Provides actionable insights and recommendations based on evaluation results.
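A minimal plain-text report generator, assuming metric scores normalised to 0 to 1. The 0.8 and 0.6 thresholds separating strengths from areas for improvement are hypothetical defaults.

```python
from typing import Dict


def build_report(scores: Dict[str, float], strong: float = 0.8, weak: float = 0.6) -> str:
    """Render metric scores as a structured text report listing strengths
    (score >= strong) and areas for improvement (score < weak)."""
    ordered = sorted(scores.items())
    lines = ["Evaluation summary", ""]
    lines += [f"{metric}: {score:.2f}" for metric, score in ordered]
    strengths = [m for m, s in ordered if s >= strong]
    gaps = [m for m, s in ordered if s < weak]
    lines += ["",
              "Strengths: " + (", ".join(strengths) or "none"),
              "Areas for improvement: " + (", ".join(gaps) or "none")]
    return "\n".join(lines)
```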

2.5 Feedback and Improvement Layer

Feedback Loop Manager:

Collects user feedback and evaluation results to identify problem areas.

Facilitates collaboration between AI developers and evaluators.
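Identifying problem areas can be sketched by combining low metric scores with repeated negative feedback. `Feedback` is a hypothetical record type, and both thresholds are assumptions.

```python
from collections import Counter
from dataclasses import dataclass
from typing import Dict, List, Sequence


@dataclass
class Feedback:
    source: str      # "user" or "stakeholder"
    metric: str      # metric the feedback relates to, e.g. "CMC"
    positive: bool
    comment: str = ""


def problem_areas(feedback: Sequence[Feedback], scores: Dict[str, float],
                  score_floor: float = 0.7, min_complaints: int = 2) -> List[str]:
    """Metrics scoring below `score_floor` or collecting at least `min_complaints`
    negative feedback items, worst score first (unscored metrics sort first)."""
    complaints = Counter(f.metric for f in feedback if not f.positive)
    flagged = {m for m, s in scores.items() if s < score_floor}
    flagged |= {m for m, n in complaints.items() if n >= min_complaints}
    return sorted(flagged, key=lambda m: scores.get(m, 0.0))
```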

Continuous Improvement Module:

Suggests model retraining or system adjustments based on evaluation results.

Uses machine learning to adapt and refine the evaluation process over time.
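"Uses machine learning" can start very small. As one illustrative option (not necessarily the approach intended here), the LLM weight w in a hybrid blend (1 - w) * automated + w * llm can be re-fitted by least squares so that blended scores track human ratings gathered through the feedback loop. Minimising the sum of (h - a - w(l - a))^2 over w gives w = sum((l - a)(h - a)) / sum((l - a)^2), which the sketch clips to [0, 1].

```python
from typing import Sequence


def fit_llm_weight(automated: Sequence[float], llm: Sequence[float],
                   human: Sequence[float]) -> float:
    """Least-squares estimate of w in hybrid = (1 - w) * automated + w * llm,
    fitted against human ratings and clipped to [0, 1].
    All three sequences are assumed aligned and normalised to [0, 1]."""
    diffs = [l - a for a, l in zip(automated, llm)]      # l - a
    targets = [h - a for a, h in zip(automated, human)]  # h - a
    denom = sum(d * d for d in diffs)
    if denom == 0:
        return 0.5  # automated and LLM scores agree everywhere; w is unidentifiable
    w = sum(d * t for d, t in zip(diffs, targets)) / denom
    return min(1.0, max(0.0, w))
```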

3. Implementation Steps

Setup and Configuration:

Define evaluation criteria and metrics specific to the use case.

Configure logging and data collection for interaction and performance tracking.
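Per-use-case criteria can live in a small configuration file that is validated at load time. The JSON keys, the restaurant-booking use case and the weights below are hypothetical.

```python
import json
from dataclasses import dataclass
from typing import Dict

KNOWN_METRICS = {"DME", "CMC", "CPA", "CS"}

# Hypothetical configuration for one use case; keys and values are illustrative.
EXAMPLE_CONFIG = """
{
  "use_case": "restaurant_booking",
  "metric_weights": {"DME": 0.3, "CMC": 0.25, "CPA": 0.3, "CS": 0.15},
  "log_sample_rate": 1.0
}
"""


@dataclass
class EvaluationConfig:
    use_case: str
    metric_weights: Dict[str, float]
    log_sample_rate: float = 1.0  # fraction of interactions to log

    def __post_init__(self) -> None:
        unknown = set(self.metric_weights) - KNOWN_METRICS
        if unknown:
            raise ValueError(f"unknown metrics: {sorted(unknown)}")
        if abs(sum(self.metric_weights.values()) - 1.0) > 1e-6:
            raise ValueError("metric weights must sum to 1")
        if not 0.0 < self.log_sample_rate <= 1.0:
            raise ValueError("log_sample_rate must be in (0, 1]")


def load_config(text: str) -> EvaluationConfig:
    return EvaluationConfig(**json.loads(text))
```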

Data Integration and Processing:

Implement data pipelines to integrate and preprocess collected data.

Develop metric calculators and scoring modules for automated evaluation.
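A preprocessing step in such a pipeline might group raw JSONL interaction records into ordered per-conversation transcripts and drop malformed lines, as in this standard-library sketch. The required field names mirror a hypothetical log schema; at larger scale the same logic could move to a tool like Apache Spark.

```python
import json
from collections import defaultdict
from typing import Dict, Iterable, List, Tuple

REQUIRED_FIELDS = {"conversation_id", "turn", "user_input", "ai_response"}


def build_conversations(jsonl_lines: Iterable[str]) -> Tuple[Dict[str, List[dict]], int]:
    """Group raw JSONL records into per-conversation transcripts ordered by turn.
    Returns (conversations, number_of_skipped_records)."""
    conversations: Dict[str, List[dict]] = defaultdict(list)
    skipped = 0
    for line in jsonl_lines:
        try:
            record = json.loads(line)
        except json.JSONDecodeError:
            skipped += 1  # not valid JSON
            continue
        if not isinstance(record, dict) or not REQUIRED_FIELDS.issubset(record):
            skipped += 1  # valid JSON but incomplete
            continue
        record["user_input"] = record["user_input"].strip()  # light normalisation
        conversations[record["conversation_id"]].append(record)
    for turns in conversations.values():
        turns.sort(key=lambda r: r["turn"])
    return dict(conversations), skipped
```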

LLM Integration:

Develop prompt templates for qualitative assessments.

Implement LLM interfaces and configure evaluation criteria for LLMs.
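A possible prompt template and response parser for the LLM evaluator. The rubric wording, the 1 to 5 scale and the `Score: N` reply format are assumptions; constraining the output format makes replies machine-parseable, and a `None` result signals that the reply should be retried or reviewed.

```python
import re
from string import Template
from typing import Optional

# Hypothetical rubric prompt; wording and scale are illustrative.
JUDGE_PROMPT = Template(
    "You are evaluating a conversational assistant on $metric.\n"
    "Criteria: $criteria\n\n"
    "Conversation:\n$transcript\n\n"
    "Reply with a one-sentence justification, then a final line of the form "
    "'Score: N' where N is an integer from 1 (poor) to 5 (excellent)."
)

SCORE_RE = re.compile(r"^Score:\s*([1-5])\s*$", re.MULTILINE)


def render_prompt(metric: str, criteria: str, transcript: str) -> str:
    return JUDGE_PROMPT.substitute(metric=metric, criteria=criteria, transcript=transcript)


def parse_score(reply: str) -> Optional[int]:
    """Extract the last 'Score: N' line; None means the reply ignored the format."""
    matches = SCORE_RE.findall(reply)
    return int(matches[-1]) if matches else None
```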

Dashboard and Reporting:

Design and implement real-time dashboards.

Develop report templates and automate report generation.

Feedback Loop Setup:

Establish channels for collecting feedback from users and stakeholders.

Implement continuous improvement workflows based on evaluation results.

Testing and Iteration:

Test each component of the framework to ensure accurate data collection and evaluation.

Iterate and refine components based on testing and feedback.

4. Technology Stack Recommendations

Data Collection:

Logging: Fluentd, ELK Stack (Elasticsearch, Logstash, Kibana)

Storage: Amazon S3, Google Cloud Storage

Processing and Analysis:

Data Processing: Apache Spark, Pandas

Metric Calculation: Python, TensorFlow

Evaluation and Scoring:

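LLM Integration: OpenAI GPT models for prompted qualitative assessments; Google BERT for embedding-based similarity scoring (as an encoder model, BERT does not follow prompts or generate assessments)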

Aggregation: Python, Scikit-Learn

Dashboard and Reporting:

Visualization: Grafana, Power BI, Tableau

Reporting: Jupyter Notebooks, Google Data Studio (now Looker Studio)

Feedback and Improvement:

Feedback Collection: Google Forms, Slack Integrations

Continuous Improvement: Jenkins, GitLab CI/CD
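In a Jenkins or GitLab CI/CD pipeline, evaluation results can act as a quality gate: a script exits with a non-zero status when any metric falls below its threshold, which fails the job. The thresholds and the scores-file format (a JSON object mapping metric names to scores) are hypothetical.

```python
import json
import sys
from typing import Dict, List

# Hypothetical minimum scores a build must meet; tune per use case.
THRESHOLDS: Dict[str, float] = {"DME": 0.75, "CMC": 0.7, "CPA": 0.7, "CS": 0.65}


def failing_metrics(scores: Dict[str, float], thresholds: Dict[str, float]) -> List[str]:
    """Metrics below threshold; a metric missing from `scores` counts as 0."""
    return [m for m, t in thresholds.items() if scores.get(m, 0.0) < t]


def main(path: str) -> int:
    with open(path, encoding="utf-8") as f:
        scores = json.load(f)
    failures = failing_metrics(scores, THRESHOLDS)
    for m in failures:
        print(f"FAIL {m}: {scores.get(m, 0.0):.2f} < {THRESHOLDS[m]:.2f}")
    return 1 if failures else 0  # a non-zero exit code fails the CI job


if __name__ == "__main__" and len(sys.argv) > 1:
    sys.exit(main(sys.argv[1]))
```

A CI step would then run something like `python quality_gate.py scores.json` after the evaluation job, where both file names are placeholders.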
